This series of five lessons comes from our Taccle3 partners in Salamanca. The lessons are also available in Spanish: Ideas y recursos en español (Ideas and resources in Spanish).

1. Overview

The aim of this activity is to train compulsory secondary school students in computational thinking applied to Ethics. To do so, the lesson plan focuses on the ethical implications of programming self-driving vehicles to take actions with moral consequences when a crash is unavoidable and damage and harm are certain to occur. Students should be able to analyse and represent such situations, study their possible outcomes, reflect on the ethical behaviour of machines, and "program" that behaviour as a response to given inputs according to moral principles.

Age: 14-16 years.

Level: medium – advanced.

21st century skills: computational thinking, decision making, logical reasoning, ethical discussion.

2. Aim of the lesson

This lesson is intended to develop young people's skills in computational thinking, logical reasoning and algorithmic decision making. This will be done by analysing different ethical approaches and formalising their main principles, so that machines could, in theory, be programmed to behave ethically. In addition to these "computational" skills, students will become aware of the relevance of Ethics and ethical approaches, not only as guiding principles for our daily decisions, but also as a basis for discussing, and trying to reach consensus on, socially accepted moral principles that determine how intelligent agents should behave in their interactions with humans, other machines and the environment.

3. Tools and resources

MIT Media Lab: Moral Machine. http://moralmachine.mit.edu.

ClaimMS: AccidentSketch.com. http://draw.accidentsketch.com.

AV-DMEC Framework (see practical activity).

Matthieu Cherubini: Ethical autonomous vehicles. https://vimeo.com/85939744.

Kahoot: http://kahoot.it.

4. Practical activity

The lesson plan is structured in five sessions, as follows:

Session 1. Introduction and Moral Machine platform.

The first session will be devoted to introducing students to the challenges involved in programming self-driving vehicles, which arise not only from technical issues but also (perhaps mainly) from ethical ones.

The first activity will consist of asking students to read a newspaper article and then discuss in groups the differences between autonomous vehicles and current cars, as well as the ethical consequences of letting cars decide for themselves: who is responsible for the damage caused by the car? How would you feel if you knew that, in certain circumstances, the car would choose to kill you instead of killing others?

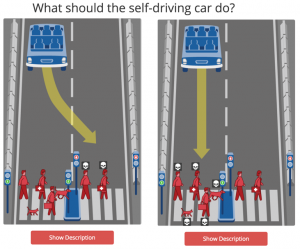

The second activity will let students decide how a car should behave under specific conditions. To do so, they will be asked to visit the MIT Media Lab's Moral Machine portal, http://moralmachine.mit.edu (see Figure 1), where they can browse, design and judge different scenarios, and then compare their responses with those of other people.

Figure 1. Moral Machine portal. Judging function.

Session 2. Analysis of pre-defined scenarios

During the second session, the instructor will split the class into groups and provide them with some pre-designed scenarios (see Figure 2 for an example). Each group will then analyse and discuss its scenario and decide how the car should behave according to some of the ethical approaches studied in previous lessons, suitably redefined to fit these particular situations.

After that, each group will present its scenario to the rest of the class, together with the possible outcomes under the different ethical approaches. Finally, the whole class will discuss each scenario, trying to agree, if possible, on the outcome and ethical approach that offer the "best" solution.

Figure 2. Pre-defined scenario for classroom discussion

(Iyad Rahwan. http://www.popularmechanics.com)

Session 3. Student-designed scenarios (i)

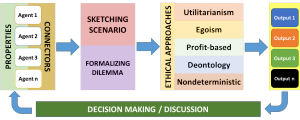

During the third session, students will be asked to develop their own scenarios for machine ethical decision making in groups, using elements from the matrix in Table 1 and planning the ethical decision-making process according to the Autonomous Vehicle – Decision Making in Ethics Classroom (AV-DMEC) framework shown in Figure 3. To do so, they will start by selecting the number and nature of the agents involved in the scenario. Then, they will assign properties to the agents to complete and clarify the actions to be analysed. Next, they will sketch the scene using a free tool such as AccidentSketch.com, http://draw.accidentsketch.com. They should also describe the scene with logical propositions, combining connectors and natural language (for example: Car A is cutting across the path of the AV (autonomous vehicle); the AV cannot stop in time to avoid a collision AND will run over the motorcyclist OR crash into a barrier OR run over two pedestrians on the sidewalk).
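As a minimal sketch of how the example propositions above could be "programmed", the Python code below encodes them with the AND, OR, IF, THEN, ELSE connectors of Table 1 and prints a small truth table for the collision condition. The function and variable names, and the "brake and stop safely" fallback when the collision can be avoided, are illustrative assumptions rather than part of the original lesson.

```python
from itertools import product

# Hypothetical encoding of the Session 3 example scene.
# Names and the fallback action are illustrative assumptions.


def collision_unavoidable(car_a_cuts_path: bool, av_can_stop: bool) -> bool:
    # Car A is cutting across the AV's path AND the AV cannot stop in time.
    return car_a_cuts_path and not av_can_stop


def possible_actions(car_a_cuts_path: bool, av_can_stop: bool) -> list[str]:
    # IF the collision is unavoidable THEN the AV must choose one of the
    # OR-alternatives ELSE it can simply brake (hypothetical default).
    if collision_unavoidable(car_a_cuts_path, av_can_stop):
        return [
            "run over the motorcyclist",
            "crash into a barrier",
            "run over two pedestrians on the sidewalk",
        ]
    else:
        return ["brake and stop safely"]


if __name__ == "__main__":
    # Truth table for the AND / NOT combination above.
    for cuts, stops in product([True, False], repeat=2):
        print(f"cuts={cuts}, can_stop={stops} -> unavoidable={collision_unavoidable(cuts, stops)}")

    # The scene described in the text: Car A cuts in and the AV cannot stop.
    for action in possible_actions(car_a_cuts_path=True, av_can_stop=False):
        print("option:", action)
```

Writing the truth table out makes it easy for a group to check that its natural-language description and its logical propositions really say the same thing.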

Table 1. Matrix with sample elements for developing car crash scenarios

| Element | Sample values |
|---|---|
| Agents | AV-car, Bus, School bus, Motorcycle, Cyclist, Pedestrian, Obstacle, Traffic light, […] |
| Properties | Red/green light, Child, Baby, Pregnant woman, Old man/woman, Wrong way, Same way, Slower/Faster, with/without helmet, Correctly/incorrectly crossing, Crash, Stop, Run over, […] |
| Connectors | AND, OR, IF, THEN, ELSE |
| Ethical approaches | Utilitarianism, Egoism, Profit-based, Deontology, Nondeterministic |

Figure 3. AV-DMEC framework

Session 4. Student-designed scenarios (ii)

After verifying that the sketch and the logical propositions match and clearly describe the scenario, students will be asked to analyse the possible outcomes under different ethical approaches. After studying and discussing these approaches, they should select the one that leads to the most desirable outcome and explain their reasons.
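To compare approaches, a group could score each possible outcome and let each ethical approach pick one. The Python sketch below is a deliberately simplified, hypothetical formalisation of the five approaches listed in Table 1, applied to the Session 3 example scene. The harm counts, costs and rules (for instance, reading deontology as "do not harm people who obey the rules, and prefer not to swerve") are assumptions made for illustration, not definitions taken from the lesson or from the AV-DMEC framework.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Outcome:
    action: str
    passengers_harmed: int       # people inside the AV
    others_harmed: int           # people outside the AV
    harms_rule_followers: bool   # harms someone who is obeying traffic rules
    swerves: bool                # AV actively leaves its current course
    cost: int                    # hypothetical damage cost, arbitrary units


# Hypothetical values for the Session 3 example scene.
OUTCOMES = [
    Outcome("run over the motorcyclist", 0, 1, True, False, 5),
    Outcome("crash into a barrier", 1, 0, False, True, 3),
    Outcome("run over two pedestrians on the sidewalk", 0, 2, True, True, 8),
]


def utilitarianism(outcomes):
    # Minimise the total number of people harmed (ties: first in list).
    return min(outcomes, key=lambda o: o.passengers_harmed + o.others_harmed)


def egoism(outcomes):
    # Protect the AV's own passengers first, then minimise other harm.
    return min(outcomes, key=lambda o: (o.passengers_harmed, o.others_harmed))


def profit_based(outcomes):
    # Minimise the (hypothetical) economic cost of the crash.
    return min(outcomes, key=lambda o: o.cost)


def deontology(outcomes):
    # Rule 1: avoid harming people who obey the rules.
    # Rule 2: prefer not to actively swerve (doing vs. allowing harm).
    # False sorts before True, so rule-respecting options win.
    return min(outcomes, key=lambda o: (o.harms_rule_followers, o.swerves))


def nondeterministic(outcomes, seed=None):
    # Choose at random; a fixed seed makes the choice repeatable.
    return random.Random(seed).choice(outcomes)


APPROACHES = {
    "Utilitarianism": utilitarianism,
    "Egoism": egoism,
    "Profit-based": profit_based,
    "Deontology": deontology,
    "Nondeterministic": nondeterministic,
}

if __name__ == "__main__":
    for name, approach in APPROACHES.items():
        print(f"{name:16} -> {approach(OUTCOMES).action}")
```

With these assumed values, utilitarianism and egoism both choose the motorcyclist, while the profit-based and deontological rules choose the barrier, which gives students a concrete disagreement to discuss. Note that utilitarianism finds a tie (one person harmed in both the motorcyclist and barrier outcomes) and `min` silently breaks it by list order: that hidden choice is itself an ethical decision worth questioning.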

Session 5. Discussion and feedback

The last session will be devoted to discussing the issues raised by the results of sessions 3 and 4. For example: is there an ethical approach that is generally preferable? Are there scenarios where it is impossible to determine a "better" outcome? Are there scenarios where none of the ethical approaches seems to provide a reasonable solution?

To introduce students to more complex computational thinking and ethical decision-making processes, they will be invited to watch the video Ethical autonomous vehicles, https://vimeo.com/85939744, in which Matthieu Cherubini illustrates two case studies (scenarios) under three different ethical approaches. Students will then analyse the ethical algorithms and their formal representation, as presented at http://research.mchrbn.net/eav.

Finally, students will take part in a game-based learning competition, a Kahoot quiz (http://kahoot.it) prepared by the instructor, to assess what they have learned.

5. Assessment

The aim of evaluating the learning experience is twofold. On the one hand, it assesses students' performance in understanding and defining the scenarios (both visually and linguistically) and in making ethical decisions about them. To do so, the instructor will take note of the students' experiences and outputs, discussions and arguments, and will guide them towards more accurate logical and ethical reasoning whenever it is not being carried out properly. On the other hand, it is necessary to assess students' satisfaction with the lesson plan itself: how they felt, and to what extent they improved their capacity to analyse moral dilemmas logically and to apply different ethical approaches to the scenarios they created. This will be done through a set of questionnaires developed with Kahoot (http://kahoot.it) and a final group reflection, followed by a short individual essay to be handed in to the instructor. In this way, the learning activity itself can be evaluated and improved in subsequent iterations.